Amazon S3 (Simple Storage Service) provides safe, secure, highly scalable object-based storage in the cloud. You pay only for what you use, storage is unlimited, and an individual object can be anywhere between 0 bytes and 5 terabytes in size.

Amazon S3 is one of the core services in AWS. When an object is successfully uploaded, you receive an HTTP 200 status code.
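As a rough illustration of that success check, the sketch below uploads a small object with boto3 (the AWS SDK for Python) and looks at the status code on the response. The helper names, bucket name, and key are placeholders made up for this example, and it assumes boto3 is installed and AWS credentials are already configured.

```python
def upload_succeeded(response: dict) -> bool:
    """Return True when an S3 PutObject response reports HTTP 200."""
    return response.get("ResponseMetadata", {}).get("HTTPStatusCode") == 200


def upload_text(bucket: str, key: str, text: str) -> bool:
    """Upload a small text object and report whether S3 answered with 200.

    Assumes boto3 is installed and AWS credentials are configured.
    """
    import boto3  # imported lazily so upload_succeeded works without it

    s3 = boto3.client("s3")
    response = s3.put_object(Bucket=bucket, Key=key, Body=text.encode("utf-8"))
    return upload_succeeded(response)


# Hypothetical usage ("my-example-bucket" is a placeholder name):
# upload_text("my-example-bucket", "hello.txt", "Hello, S3!")
```

If the upload fails, boto3 normally raises an exception instead of returning a response, so real code would likely wrap the call in a try/except as well.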

With S3, you can easily change the storage classes and the encryption policies of the Objects and Buckets. For redundancy, you can have contents replicated automatically by using Cross-Region Replication (CRR).

Amazon S3 “Objects” go into “Buckets”

When they say Objects, think Files: images, HTML pages, .zip files, and so on. Each individual object can be as large as 5 TB, and you can upload an unlimited number of them.

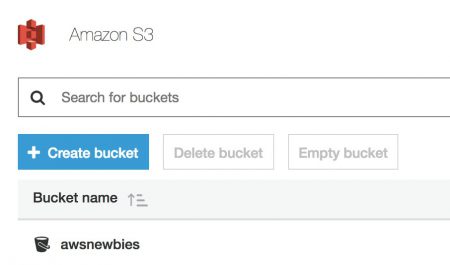

Buckets are where you keep the Objects. Each Bucket exists in a “global namespace.”

This means that no two buckets in ALL of AWS can have an identical name. This includes everyone else in the world who uses S3 – not just within your own account.

For example, I just went ahead and created an S3 Bucket called “awsnewbies.” This means that no one else using S3 in all of AWS infrastructure has a Bucket called “awsnewbies.”

The easiest analogy is a Folder (Bucket) on your computer where you keep your Files (Objects): you can’t have two Folders with the same name at the same level.

Access permissions can be set at the Object level (ACL) or at the Bucket level (Bucket Policy).

What’s in an Object?

Objects consist of object data and metadata. Metadata is a set of name-value pairs that describe the object, such as “Department: Finance” or “User: John.”

Parts of an Object:

- Key: Name of object

- Value: Data

- Version ID: Used for versioning (different iterations of the same file)

- Metadata: “Data about data”

- Subresources

  - Access Control Lists (ACLs)

  - Torrent
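To make the parts listed above a little more concrete, here is a toy Python model of an object. The class name `S3ObjectSketch` and the sample key are invented for this illustration; it is not a type from any AWS SDK, and it leaves out subresources entirely.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class S3ObjectSketch:
    """Toy stand-in for an S3 object; not an AWS SDK type."""
    key: str                                  # name of the object
    value: bytes                              # the data itself
    version_id: Optional[str] = None          # only set when versioning is used
    metadata: Dict[str, str] = field(default_factory=dict)  # name-value pairs


report = S3ObjectSketch(
    key="reports/2023/q1.pdf",
    value=b"%PDF-1.7 ...",
    metadata={"Department": "Finance", "User": "John"},
)

print(report.key, report.metadata["Department"])
```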

Availability and Durability of S3

S3 Standard is designed for 99.999999999% durability (commonly referred to as “eleven 9’s of durability”). Durability describes how unlikely it is that a stored file is lost or corrupted. It is also designed for 99.99% availability, which describes how likely it is that a file can be accessed when you request it.
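It can help to turn those percentages into everyday numbers. The sketch below is plain arithmetic, assuming the durability figure applies per object per year and using a hypothetical count of ten million stored objects.

```python
from fractions import Fraction

DURABILITY = Fraction("0.99999999999")   # eleven 9's, per object per year
AVAILABILITY = Fraction("0.9999")        # 99.99%

objects_stored = 10_000_000              # hypothetical number of objects

# Expected number of objects lost per year.
expected_losses_per_year = objects_stored * (1 - DURABILITY)

# Average number of years between losing a single object.
years_between_losses = 1 / expected_losses_per_year

# Minutes per 365-day year that objects could be unreachable at 99.99%.
minutes_per_year = 365 * 24 * 60
downtime_minutes = (1 - AVAILABILITY) * minutes_per_year

print(years_between_losses)        # 10000
print(float(downtime_minutes))     # 52.56
```

In other words, with ten million objects you would expect to lose one roughly every 10,000 years, while 99.99% availability still leaves room for about 53 minutes of inaccessibility per year.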

Data Consistency Model for S3

- Strong Read-After-Write Consistency for PUTS of new objects

  - A new file is visible immediately after you upload it

- Strong Read-After-Write Consistency for overwrite PUTS and DELETES (since December 2020)

  - Before that change, S3 offered only Eventual Consistency here: edits and deletions could take time to propagate, so a read might return the old version for a while until the update fully completed

Basically, whether you upload a new Object or edit or delete an existing one, the next read reflects the change. Older material (and older exam questions) may still describe the previous behavior, where you might get the pre- or post-edit version for a while.

Amazon S3 Storage Classes

You can compare and contrast the different storage classes on AWS’s “Amazon S3 Storage Classes Overview Infographic.”

- Amazon S3 Intelligent-Tiering: saves cost by automatically moving objects between cost-optimized access tiers as access patterns change (automated data lifecycle management)

- Amazon S3 Standard: general storage for frequently accessed data (millisecond access)

- Amazon S3 Standard-Infrequent Access (S3 Standard-IA): lower-cost storage for data accessed monthly (milliseconds retrieval)

- Amazon S3 Glacier Instant Retrieval: lowest-cost storage with millisecond retrieval, for long-term archive data that is rarely accessed

- Amazon S3 Glacier Flexible Retrieval: low-cost storage for archive/backup data

  - 3 retrieval speeds: expedited (1-5 minutes), standard (3-5 hours), bulk (5-12 hours)

- Amazon S3 Glacier Deep Archive: lowest-cost storage for long-term archives accessed once per year

  - 2 retrieval speeds: standard (within 12 hours), bulk (within 48 hours)

- Amazon S3 One Zone-Infrequent Access (S3 One Zone-IA): performance of S3 Standard-IA, stored in a single Availability Zone at 20% lower cost; ideal for secondary backups

- Amazon S3 on Outposts: store at on-premises AWS Outposts to meet local data processing/residency requirements
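One way to remember the classes above is to think of them as answers to "how often will I read this, and how fast do I need it back?" The helper below is only a study aid: the function name and the access thresholds are assumptions made up for this sketch, not AWS guidance. The returned strings are the `StorageClass` values the S3 API uses for each class.

```python
def pick_storage_class(accesses_per_year: int,
                       needs_millisecond_access: bool = True,
                       reproducible: bool = False) -> str:
    """Suggest a storage class from a rough access pattern.

    The thresholds are illustrative guesses, not official AWS rules.
    """
    if accesses_per_year < 0:
        raise ValueError("accesses_per_year must not be negative")

    if not needs_millisecond_access:
        # Archive tiers: retrieval takes minutes to hours.
        return "DEEP_ARCHIVE" if accesses_per_year <= 1 else "GLACIER"

    if accesses_per_year > 12:
        return "STANDARD"          # frequently accessed data
    if accesses_per_year > 4:
        # Roughly monthly access; One Zone only if the data can be recreated.
        return "ONEZONE_IA" if reproducible else "STANDARD_IA"
    return "GLACIER_IR"            # rarely read, but needed instantly


print(pick_storage_class(365))                                  # STANDARD
print(pick_storage_class(12))                                   # STANDARD_IA
print(pick_storage_class(1, needs_millisecond_access=False))    # DEEP_ARCHIVE
```

If the access pattern is unknown or keeps changing, `INTELLIGENT_TIERING` is the class meant to make this decision for you.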

Charges and Billing

You can upload an unlimited number of files that are up to 5 TB in size each. But that doesn’t mean that everything is free. Here are some ways you can get charged for using S3:

- Storage: You pay for storage at a rate based on the objects’ sizes, how long they are stored, and the storage class.

- Requests: You pay for requests put upon the objects, including lifecycle requests.

- Retrievals: You pay for retrieving objects in every storage class except S3 Standard and S3 Intelligent-Tiering.

- Early Deletes: You pay for deleting objects in S3 Standard-IA/One Zone-IA/Glacier before the minimum storage commitment has passed.

- Storage Management: You pay for storage management features enabled on the account’s buckets (e.g., Amazon S3 Inventory, analytics, object tagging).

- Data Transfer: Transferring data from one region to another (Cross-Region Replication)

- Transfer Acceleration: Fast and secure transfer of files over long distances between end users and an S3 bucket, routed through CloudFront’s edge locations

- Bandwidth: You pay for bandwidth in/out of S3 and data transferred using S3 Transfer Acceleration EXCEPT:

  - Data transferred in from the internet

  - Data transferred out to an EC2 instance (when the EC2 instance is in the same region as the S3 Bucket)

  - Data transferred out to CloudFront
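To see how a few of these charges add up, here is a back-of-the-envelope estimator. Every rate in `PlaceholderRates` is a made-up number for illustration only, and the class and function names are invented for this sketch; real prices vary by region and storage class, so check the AWS pricing page before relying on any figure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlaceholderRates:
    """Made-up USD rates for illustration only; not real AWS prices."""
    storage_per_gb_month: float = 0.02
    per_1000_requests: float = 0.005
    retrieval_per_gb: float = 0.01
    transfer_out_per_gb: float = 0.09


def estimate_monthly_bill(stored_gb: float, requests: int,
                          retrieved_gb: float, transfer_out_gb: float,
                          rates: PlaceholderRates = PlaceholderRates()) -> dict:
    """Add up storage, request, retrieval, and data transfer charges."""
    bill = {
        "storage": stored_gb * rates.storage_per_gb_month,
        "requests": requests / 1000 * rates.per_1000_requests,
        "retrievals": retrieved_gb * rates.retrieval_per_gb,
        "data_transfer": transfer_out_gb * rates.transfer_out_per_gb,
    }
    bill["total"] = round(sum(bill.values()), 2)
    return bill


bill = estimate_monthly_bill(stored_gb=500, requests=200_000,
                             retrieved_gb=50, transfer_out_gb=100)
for item, cost in bill.items():
    print(f"{item:>14}: ${cost:,.2f}")
```

The sketch ignores early-delete fees, storage management features, and the free transfer cases listed above, so treat it as a way to reason about the categories rather than a billing tool.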

Security

Encryption

- Client Side Encryption

- Server Side Encryption

  - SSE-S3: S3 Managed Keys

  - SSE-KMS: Key Management Service

  - SSE-C: Customer Provided Keys
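For the three server-side options, the difference shows up in the extra parameters sent with an upload. The sketch below builds those parameters as they would be passed to boto3's `put_object`; the function name `sse_put_args` is hypothetical, and the key ID and key bytes are placeholders supplied by the caller.

```python
from typing import Optional


def sse_put_args(mode: str,
                 kms_key_id: Optional[str] = None,
                 customer_key: Optional[bytes] = None) -> dict:
    """Build the extra put_object arguments for one SSE mode."""
    if mode == "SSE-S3":
        # S3 creates and manages the keys.
        return {"ServerSideEncryption": "AES256"}
    if mode == "SSE-KMS":
        # Keys live in AWS KMS; without a key ID the default key is used.
        args = {"ServerSideEncryption": "aws:kms"}
        if kms_key_id:
            args["SSEKMSKeyId"] = kms_key_id
        return args
    if mode == "SSE-C":
        # You supply the key with every request; S3 does not store it.
        if customer_key is None or len(customer_key) != 32:
            raise ValueError("SSE-C needs a 32-byte (256-bit) key")
        return {"SSECustomerAlgorithm": "AES256",
                "SSECustomerKey": customer_key}
    raise ValueError(f"unknown mode: {mode}")


print(sse_put_args("SSE-S3"))

# Hypothetical usage with a boto3 client named s3:
# s3.put_object(Bucket="my-example-bucket", Key="secret.txt",
#               Body=b"...", **sse_put_args("SSE-S3"))
```

Client-side encryption is not shown because it happens before the data ever reaches S3.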

Access Control

By default, all buckets and objects are set to be private.

- Access Control List: Bucket and File Level

- Bucket Policy: Bucket Level
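A Bucket Policy is a JSON document attached to the bucket. As a minimal sketch, the helper below builds a policy that lets anyone read objects under a prefix; the function name and the "public/" prefix are assumptions for this example, and "awsnewbies" is just the bucket name used earlier in this post.

```python
import json


def public_read_policy(bucket: str, prefix: str = "") -> dict:
    """Build a bucket policy allowing anonymous reads under a prefix."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Sid": "AllowPublicRead",
            "Effect": "Allow",
            "Principal": "*",                 # anyone, including anonymous users
            "Action": "s3:GetObject",         # read objects only
            "Resource": f"arn:aws:s3:::{bucket}/{prefix}*",
        }],
    }


policy_json = json.dumps(public_read_policy("awsnewbies", "public/"), indent=2)
print(policy_json)

# Hypothetical usage with a boto3 client named s3:
# s3.put_bucket_policy(Bucket="awsnewbies", Policy=policy_json)
```

Since buckets are private by default, a policy like this may also be blocked by the account's or bucket's Block Public Access settings until those are relaxed.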

Versioning

- Once you enable versioning on a bucket, you can only suspend it; you can never disable it

- S3 keeps every version of every file, so be mindful of the costs associated with keeping many versions of large files

- Versioning stores all versions of an object, including all writes and deletes

- MFA Delete Capability: protects against accidental deletion by requiring multi-factor authentication

- Integrates with Lifecycle rules
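The "stores all writes and deletes" behavior is easier to picture with a small in-memory model. The class below is a toy written for this post (all names are invented, and the version IDs are simplified); it does not talk to AWS, it only mimics how a plain delete adds a delete marker while older versions stay retrievable.

```python
import itertools
from typing import Dict, List, Optional


class VersionedBucketSketch:
    """Toy model of a versioning-enabled bucket; not an AWS API."""

    def __init__(self) -> None:
        self._versions: Dict[str, List[dict]] = {}
        self._ids = itertools.count(1)

    def _append(self, key: str, data: Optional[bytes], marker: bool) -> str:
        version_id = f"v{next(self._ids)}"
        self._versions.setdefault(key, []).append(
            {"id": version_id, "data": data, "delete_marker": marker})
        return version_id

    def put(self, key: str, data: bytes) -> str:
        """Every write creates a new version instead of overwriting."""
        return self._append(key, data, marker=False)

    def delete(self, key: str) -> str:
        """A plain delete only adds a delete marker on top."""
        return self._append(key, None, marker=True)

    def get(self, key: str, version_id: Optional[str] = None) -> Optional[bytes]:
        versions = self._versions.get(key, [])
        if version_id is not None:
            for version in versions:
                if version["id"] == version_id:
                    return version["data"]
            return None
        if not versions or versions[-1]["delete_marker"]:
            return None                      # looks deleted to a normal read
        return versions[-1]["data"]

    def version_count(self, key: str) -> int:
        return len(self._versions.get(key, []))


bucket = VersionedBucketSketch()
first = bucket.put("notes.txt", b"draft")
bucket.put("notes.txt", b"final")
bucket.delete("notes.txt")

print(bucket.get("notes.txt"))            # None
print(bucket.get("notes.txt", first))     # b'draft'
print(bucket.version_count("notes.txt"))  # 3
```

The count of 3 is the cost point from the list above: both writes and the delete marker are kept until a lifecycle rule or an explicit version delete removes them.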

Cross-Region Replication

- Versioning must be enabled for both source and destination buckets

- Only new objects are replicated

- Regions must be different (cross-region)

- Cannot daisy-chain to multiple buckets

- Use the AWS CLI to transfer existing objects

  - aws s3 cp --recursive s3://[source bucket] s3://[destination bucket]

- Deletion marker is replicated, but when the deletion marker or a version is deleted, that action is not replicated
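For reference, replication is switched on by attaching a replication configuration to the source bucket. The sketch below builds one such configuration in the shape boto3's `put_bucket_replication` expects. The function names, rule ID, role ARN, and bucket names are placeholders for this example; it assumes an IAM role that S3 can use already exists and that versioning is already enabled on both buckets.

```python
def replication_config(role_arn: str, destination_bucket: str) -> dict:
    """Build a one-rule replication configuration covering every object."""
    return {
        "Role": role_arn,                       # IAM role S3 assumes to replicate
        "Rules": [{
            "ID": "replicate-everything",       # hypothetical rule name
            "Priority": 1,
            "Status": "Enabled",
            "Filter": {"Prefix": ""},           # empty prefix matches all keys
            # Assumption: delete markers are replicated, as described above.
            "DeleteMarkerReplication": {"Status": "Enabled"},
            "Destination": {"Bucket": f"arn:aws:s3:::{destination_bucket}"},
        }],
    }


def enable_replication(source_bucket: str, config: dict) -> None:
    """Attach the configuration; assumes boto3 and credentials are set up."""
    import boto3  # imported lazily so replication_config works without it

    s3 = boto3.client("s3")
    s3.put_bucket_replication(Bucket=source_bucket,
                              ReplicationConfiguration=config)


config = replication_config(
    "arn:aws:iam::123456789012:role/example-replication-role",
    "my-destination-bucket",
)
print(config["Rules"][0]["Destination"]["Bucket"])
```

Only objects written after the rule is in place are picked up, which is why the CLI copy above is still needed for anything already in the bucket.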

Resources

- Amazon S3 (AWS)

- Amazon S3 FAQs (AWS KB)

- Amazon S3 Features (AWS)

- Amazon S3 Object Storage Classes (AWS KB)
